Building intelligent systems that matter

AI/ML Engineer developing scalable intelligent systems that redefine how organizations process and understand information.

About

I focus on advancing memory-augmented LLMs and AI agents, and I am currently building project-specific hierarchical memory systems for more coherent long-term reasoning.

My past work spans vision models, multilingual RAG systems, and large-scale regressors, shaping my interest in scalable intelligence and how models store, update, and use structured knowledge effectively.

Featured Projects

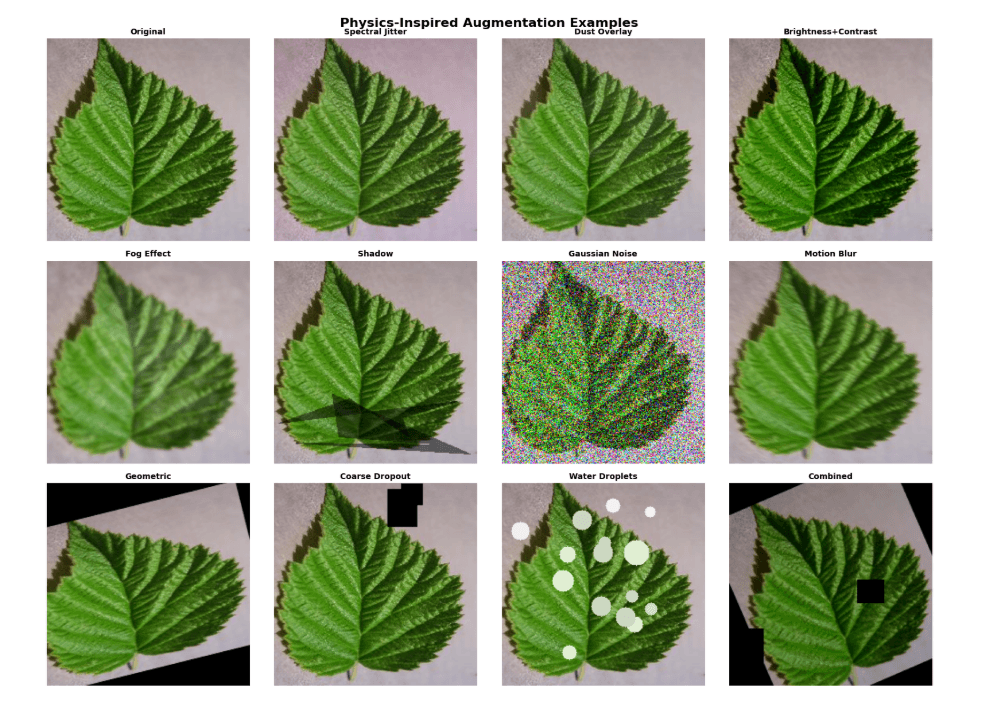

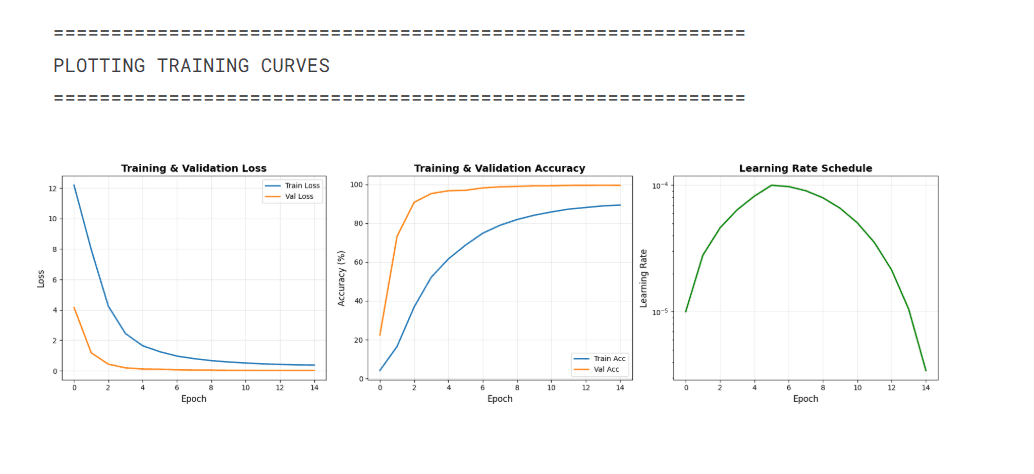

Hybrid ViT Model for Crop Diagnosis

Nov 2025 • Advanced Crop Disease Detection System

Designed a hybrid ViT + CNN + Custom MLP architecture that processes images in parallel to detect 38 crop disease classes, achieving 85% training accuracy and 99.5% validation accuracy. Developed an IoT-ready inference pipeline for real-time deployment with sensor integration and filed a patent covering the model architecture and end-to-end diagnostic workflow.

Key Metrics

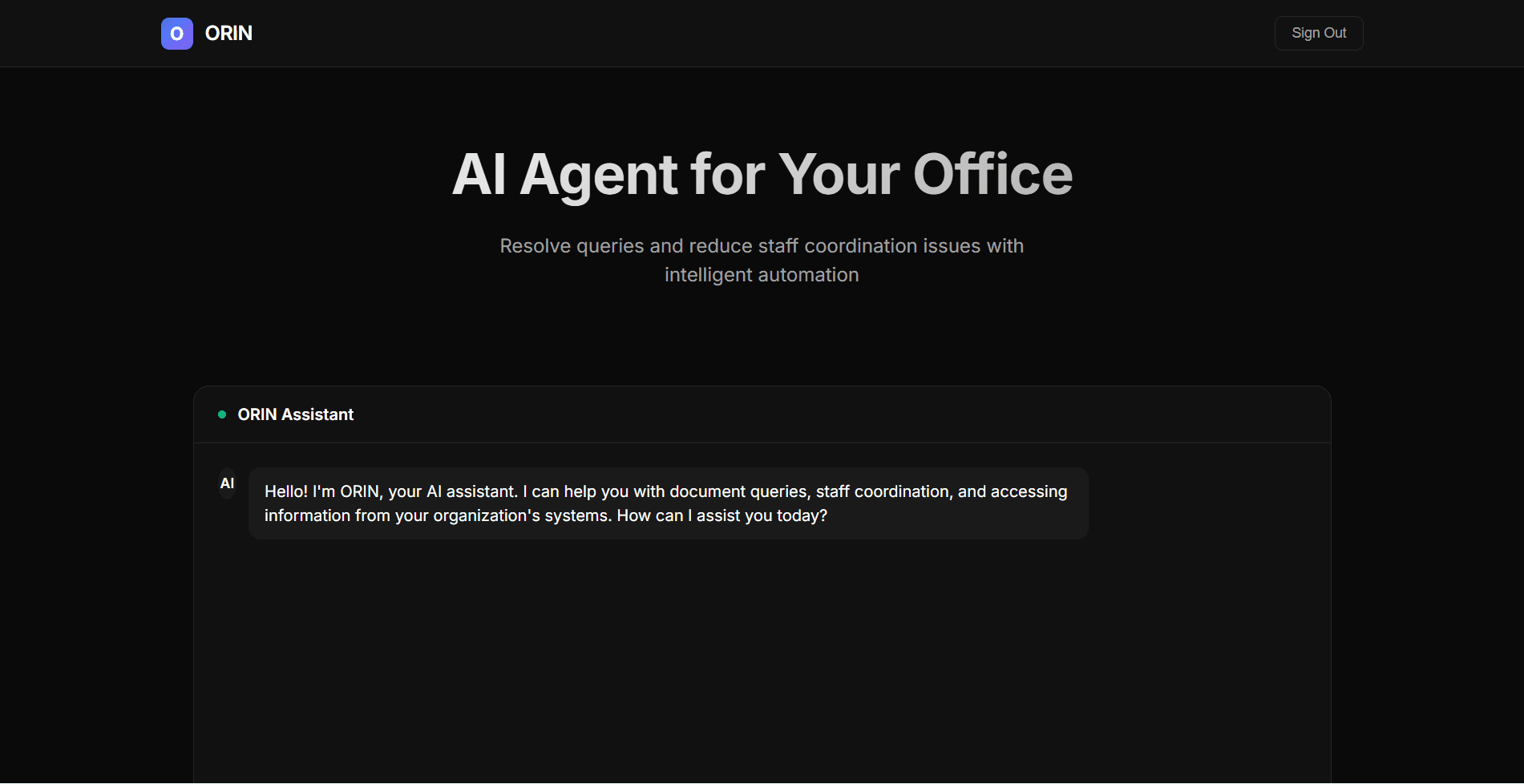

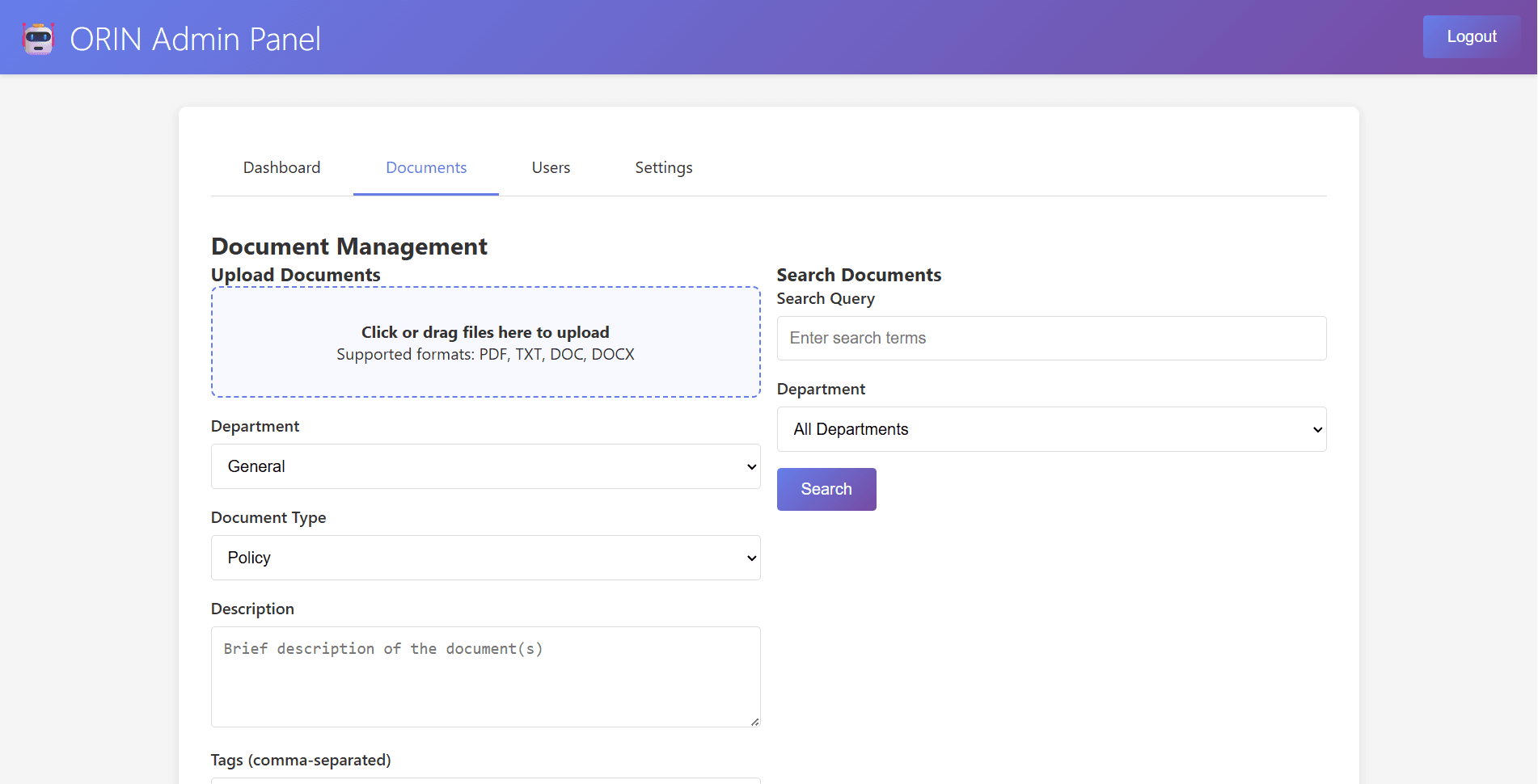

ORIN Tri-Sense AI

Oct 2025 • Multilingual RAG Chat-Runtime

Built and fine-tuned a multilingual RAG chat-runtime for universities, government, and private organizations (6+ languages). Optimized a proprietary RAG pipeline (Pinecone) with tri-source retrieval and fine-tuning, reaching 86% relevance and 0.72 MRR. Scaled to 1k sessions / 200 QPS (latency < 300 ms), reducing time-to-answer from 96 hours to 10 minutes.
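For readers unfamiliar with the metric, MRR (mean reciprocal rank) averages, over all queries, the reciprocal of the rank at which the first relevant document appears. The sketch below is a minimal illustration of that definition, not the project's evaluation code; the function name `mean_reciprocal_rank` and the toy document IDs are assumptions made for the example.

```python
from typing import Hashable, Sequence, Set


def mean_reciprocal_rank(
    rankings: Sequence[Sequence[Hashable]],
    relevant: Sequence[Set[Hashable]],
) -> float:
    """Average of 1/rank of the first relevant hit per query (0 if none)."""
    if len(rankings) != len(relevant):
        raise ValueError("rankings and relevant must have the same length")
    if not rankings:
        raise ValueError("at least one query is required")

    total = 0.0
    for ranked_ids, relevant_ids in zip(rankings, relevant):
        for rank, doc_id in enumerate(ranked_ids, start=1):
            if doc_id in relevant_ids:
                total += 1.0 / rank
                break  # only the first relevant hit counts
    return total / len(rankings)


# Hypothetical toy data: three queries with ranked retrieval results.
toy_rankings = [["d3", "d1", "d7"], ["d2", "d9", "d4"], ["d5", "d6", "d8"]]
toy_relevant = [{"d1"}, {"d2"}, {"d0"}]
toy_mrr = mean_reciprocal_rank(toy_rankings, toy_relevant)
```

In the toy data the first relevant hits fall at rank 2, rank 1, and nowhere, so the reciprocal ranks are 0.5, 1.0, and 0. A query with no relevant result contributes zero, which is one common convention and is assumed here.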

Key Metrics

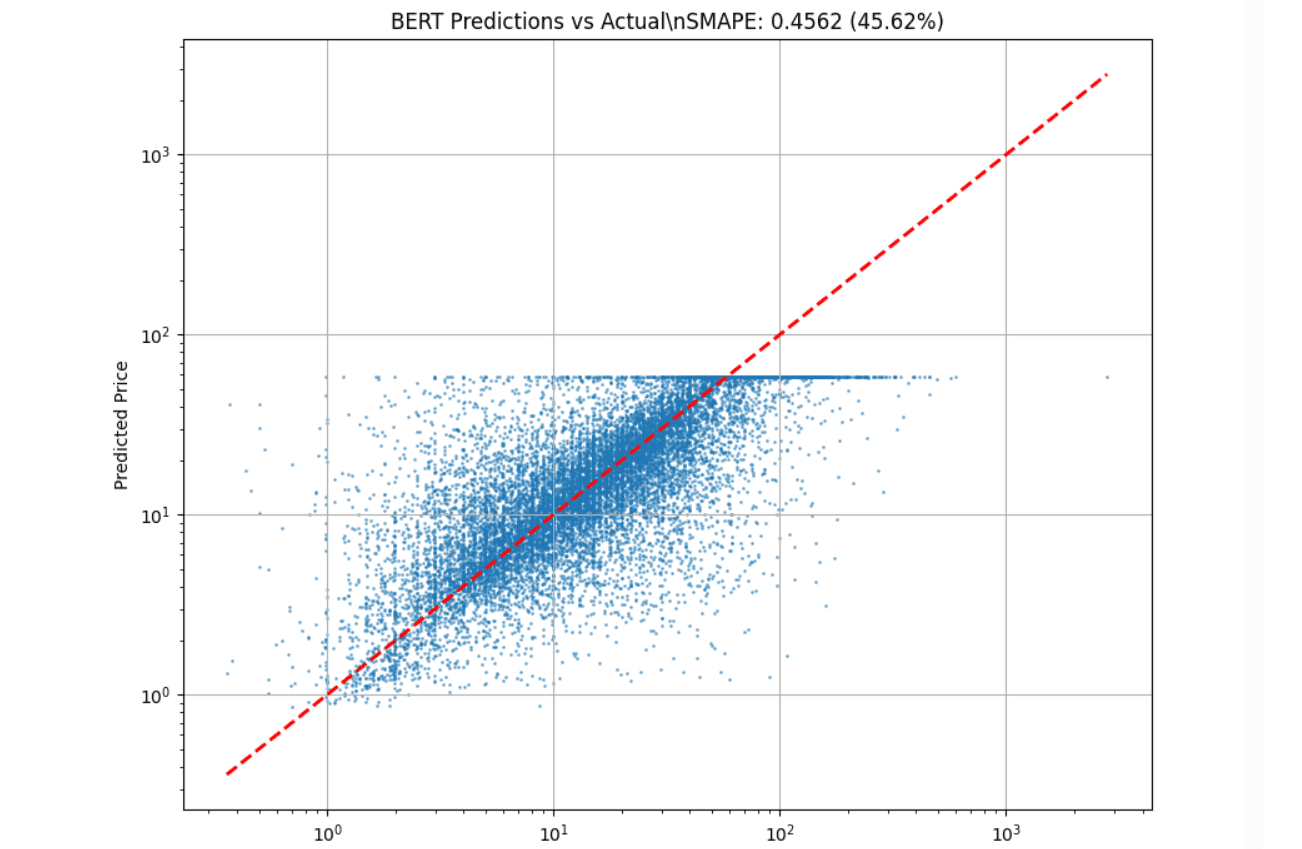

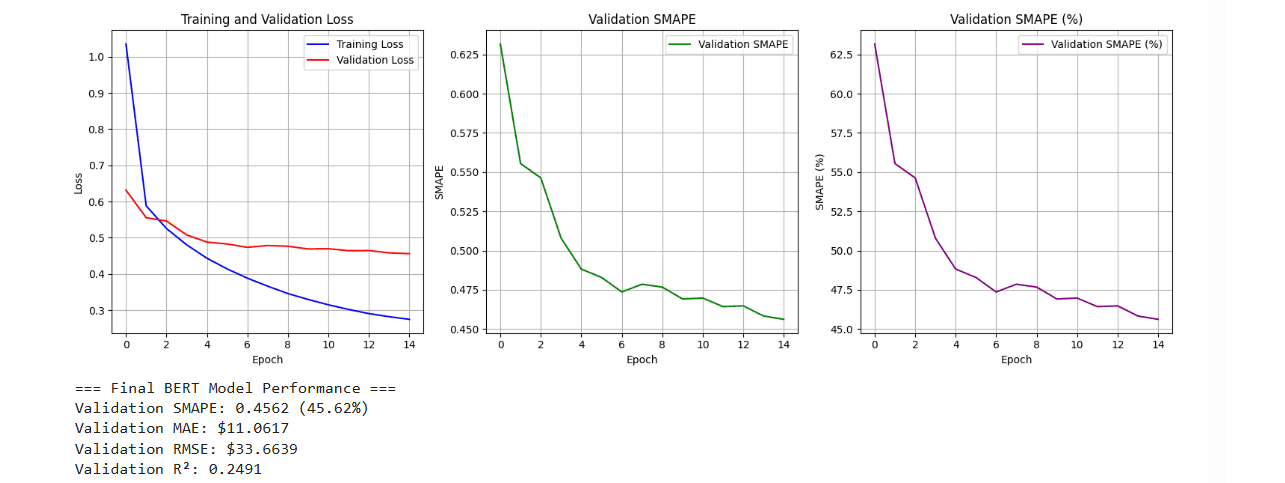

Product Price Regressor (DeBERTa-v3)

Oct 2025 • Price Prediction System

Designed and iteratively fine-tuned a DeBERTa-v3-base regression model over 30 epochs (15+7+8) on a 75,000-sample dataset for price prediction, reaching a best validation SMAPE of 21.73% (down from an initial 63.17%). Optimized training efficiency and stability on a Tesla P100-PCIE-16GB GPU using Automatic Mixed Precision (FP32/FP16), a 2e-5 learning rate, 0.01 weight decay, and gradient clipping (max_norm=1.0). The final model has 183 million parameters.
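As a reference for the metric quoted above, SMAPE (symmetric mean absolute percentage error) is commonly defined as the mean of |predicted − actual| divided by the average of |actual| and |predicted|, expressed as a percentage. Several variants exist and the project does not state which one it uses, so the sketch below assumes this common form. The function name `smape` and the toy prices are assumptions made for the example.

```python
from typing import Sequence


def smape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Symmetric mean absolute percentage error, in percent (0 to 200)."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("at least one sample is required")

    total = 0.0
    for a, p in zip(actual, predicted):
        denom = (abs(a) + abs(p)) / 2.0
        # Assumed convention: a pair where both values are zero adds no error.
        if denom > 0.0:
            total += abs(p - a) / denom
    return 100.0 * total / len(actual)


# Hypothetical toy prices: one exact prediction, one that is far off.
toy_actual = [100.0, 50.0]
toy_predicted = [100.0, 150.0]
toy_smape = smape(toy_actual, toy_predicted)
```

Because the denominator uses both the true and the predicted value, the score is bounded between 0% and 200%. That bound keeps a few large misses on low-priced items from dominating the average.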

Key Metrics

Skills & Technologies

Languages

AI/ML Frameworks

Platforms & Tools

Education & Certifications

Education

Bachelor of Technology

Computer Science and Engineering

Lovely Professional University • CGPA 8.33 • 2022-2026

Minor: Machine Learning and Deep Learning

Senior Secondary Education

Physics, Chemistry, Math, Computer Science

Sainik School Kapurthala • 2015-2022

Certifications & Achievements

Building Intelligent Troubleshooting Agents

Microsoft • Sep 2025

AI and Machine Learning Algorithms

Microsoft • Sep 2025

Generative AI with Large Language Models

DeepLearning.AI • Apr 2024

Microsoft Founder Hub Member

$20,000 in benefits and cloud credits

NCC A & B Certificates

6 years of service